Artificial Intelligence: A Guide for Thinking Humans

No recent scientific enterprise has proved as alluring, terrifying, and filled with extravagant promise and frustrating setbacks as artificial intelligence. The award-winning author Melanie Mitchell, a leading computer scientist, now reveals AI’s turbulent history and the recent spate of apparent successes, grand hopes, and emerging fears surrounding it. In Artificial Intelligence, Mitchell turns to the most urgent questions concerning AI today: How intelligent—really—are the best AI programs? How do they work? What can they actually do, and when do they fail? How humanlike do we expect them to become, and how soon do we need to worry about them surpassing us? Along the way, she introduces the dominant models of modern AI and machine learning, describing cutting-edge AI programs, their human inventors, and the historical lines of thought underpinning recent achievements. She meets with fellow experts such as Douglas Hofstadter, the cognitive scientist and Pulitzer Prize–winning author of the modern classic Gödel, Escher, Bach, who explains why he is “terrified” about the future of AI. She explores the profound disconnect between the hype and the actual achievements in AI, providing a clear sense of what the field has accomplished and how much further it has to go. Interweaving stories about the science of AI and the people behind it, Artificial Intelligence brims with clear-sighted, captivating, and accessible accounts of the most interesting and provocative modern work in the field, flavored with Mitchell’s humor and personal observations. This frank, lively book is an indispensable guide to understanding today’s AI, its quest for “human-level” intelligence, and its impact on the future for us all.

General artificial intelligence remains far off, if it is possible at all. Existing AI systems are narrow in scope and focused on solving specific sorts of problems, but they lack essential human cognitive abilities such as reasoning, counterfactual thinking, and an ontological understanding of the world, or, as the author puts it, “common sense.” The book was inspired by a meeting between the author, her former professor and AI legend Douglas Hofstadter, and a group of AI researchers at Google, where Hofstadter expressed deep worry about the state of AI and the recent progress exhibited by the AI community.

Hofstadter had thought that “human-level” AI had no chance of occurring in his (or even his children’s) lifetime, so he hadn’t worried much about it. In the mid-1990s, however, his confidence in this assessment was profoundly shaken when he encountered a program written by a musician, David Cope. The program was called Experiments in Musical Intelligence, or EMI (pronounced “Emmy”). Cope, a composer and music professor, had originally developed EMI to aid his own composing process by automatically creating pieces in Cope’s specific style. However, EMI became famous for creating pieces in the style of classical composers such as Bach and Chopin. Hofstadter was terrified that intelligence, creativity, emotions, and maybe even consciousness itself would be too easy to produce: that what he valued most in humanity would end up being nothing more than a “bag of tricks,” and that a superficial set of brute-force algorithms could explain the human spirit. He feared that AI might show us that the human qualities we most value are disappointingly simple to mechanize: “If such minds of infinite subtlety and complexity and emotional depth could be trivialized by a small chip, it would destroy my sense of what humanity is about.”

The Main Theme

The book is the author’s attempt to understand the true state of affairs in artificial intelligence: what computers can do now, and what we can expect from them over the coming decades. The author states that the book is not a general history of artificial intelligence or a survey of opinions and ideas in the community, but rather an in-depth exploration of some of the AI methods that exist today, how they affect our lives, and how they may soon challenge our existence. I don’t think the author fully delivered on this claim: the majority of the book is a history of key milestones in AI and of transformative steps in AI research (which is well written and concise). The author explored these milestones and research transitions in great technical detail, in language that is very accessible to non-technical readers, but she didn’t spend enough time presenting her argument and backing it up with evidence. In short, the balance between historical and argumentative narrative did not favor the author’s arguments; nevertheless, the author was very successful in presenting a short and compelling story of how AI progressed over the past 50 years and how we arrived at the current state of affairs in machine intelligence.

The Cradle of Artificial Intelligence

The author started the journey with a small workshop held in 1956 at Dartmouth College and organized by a young mathematician, John McCarthy, who had become intrigued with the idea of creating a thinking machine. McCarthy persuaded Marvin Minsky, Claude Shannon, and Nathaniel Rochester to help him organize “a 2 month, 10 man study of artificial intelligence to be carried out during the summer of 1956.” The term artificial intelligence was McCarthy’s invention; he wanted to distinguish this field from a related effort called cybernetics. McCarthy later admitted that no one really liked the name (after all, the goal was genuine, not “artificial,” intelligence), but “I had to call it something, so I called it ‘Artificial Intelligence.’” McCarthy and his colleagues were optimistic that AI was within close reach: “We think that a significant advance can be made in one or more of these problems if a carefully selected group of scientists work on it together for a summer.” Obstacles soon arose that would be familiar to anyone organizing a scientific workshop today. The Rockefeller Foundation came through with only half the requested amount of funding. And it turned out to be harder than McCarthy had thought to persuade the participants to actually come and then stay, not to mention agree on anything.

Since that summer, AI research has followed two main directions. The first is symbolic AI. A symbolic AI program’s knowledge consists of words or phrases (the “symbols”), typically understandable to a human, along with rules by which the program can combine and process these symbols in order to perform its assigned task. An early example was a program called the General Problem Solver, or GPS. While developing it, the cognitive scientists Herbert Simon and Allen Newell had recorded several students “thinking out loud” while solving logic puzzles, and they then designed their program to mimic what they believed were the students’ thought processes.
Advocates of the symbolic approach to AI argued that to attain intelligence in computers, it would not be necessary to build programs that mimic the brain. Instead, the argument goes, general intelligence can be captured entirely by the right kind of symbol-processing program.
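To make the symbolic idea concrete, here is a minimal Python sketch of a rule-based program: its knowledge is a set of human-readable symbols plus if-then rules, and it derives new facts by applying those rules until nothing new follows. The facts, the rules (`has_feathers`, `is_bird`, and so on), and the function name `forward_chain` are hypothetical illustrations. This is a toy forward-chaining system, not GPS itself, which worked by means-ends analysis rather than simple rule chaining.

```python
# Hypothetical knowledge base: each rule says "if all of these symbols are known,
# conclude that symbol". Everything here is readable by a human.
Rule = tuple[frozenset[str], str]

RULES: list[Rule] = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "can_fly"}), "can_migrate"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Repeatedly fire rules whose premises are all known until no new fact appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


print(sorted(forward_chain({"has_feathers", "can_fly"}, RULES)))
```

Because every step manipulates named symbols, a person can trace exactly why the program concluded `can_migrate`, which is the kind of transparency symbolic AI's advocates valued.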

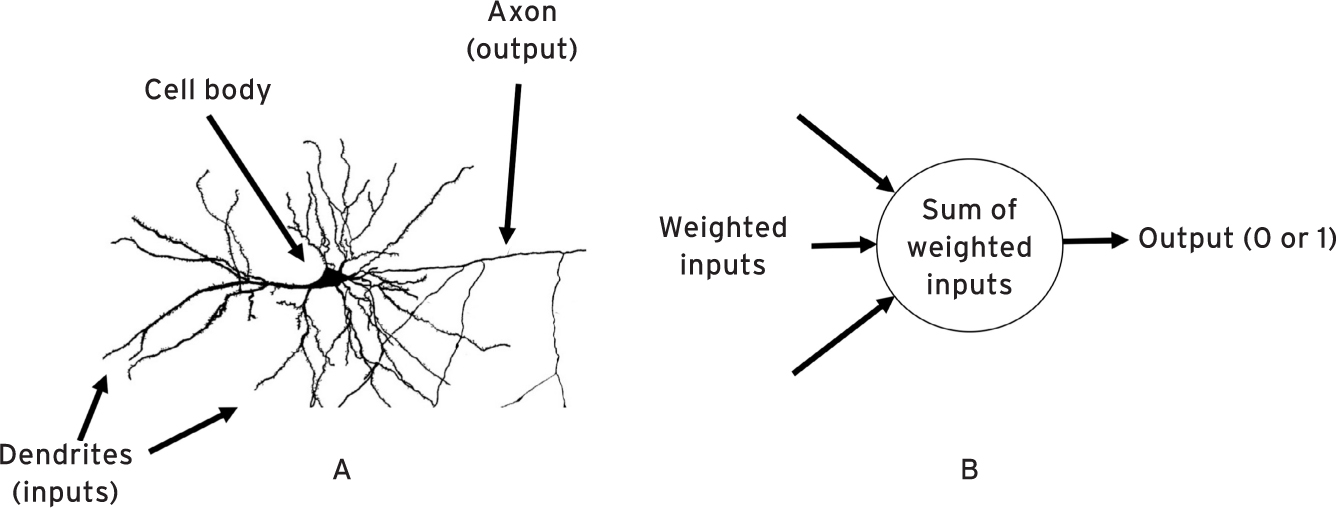

The other main direction in AI is subsymbolic AI. Symbolic AI was originally inspired by mathematical logic as well as by the way people described their conscious thought processes. In contrast, subsymbolic approaches to AI took inspiration from neuroscience and sought to capture the sometimes-unconscious thought processes underlying what some have called fast perception, such as recognizing faces or identifying spoken words. Subsymbolic AI programs do not contain the kind of human-understandable language found in symbolic programs; instead, a subsymbolic program is essentially a stack of equations, a thicket of often hard-to-interpret operations on numbers. An early example of a subsymbolic, brain-inspired AI program was the perceptron, invented in the late 1950s by the psychologist Frank Rosenblatt. The perceptron was an important milestone in AI and was the influential great-grandparent of modern AI’s most successful tool, deep neural networks.

Rosenblatt’s invention of the perceptron was inspired by the way in which neurons process information. A neuron is a cell in the brain that receives electrical or chemical input from other neurons that connect to it. Roughly speaking, a neuron sums up all the inputs it receives from other neurons, and if the total sum reaches a certain threshold level, the neuron fires. Importantly, different connections (synapses) from other neurons to a given neuron have different strengths; in calculating the sum of its inputs, the given neuron gives more weight to inputs from stronger connections than inputs from weaker connections. Neuroscientists believe that adjustments to the strength of connections between neurons are a key part of how learning takes place in the brain.
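The sum-and-threshold behavior described above maps almost directly onto code. The Python sketch below assumes binary 0/1 inputs and uses the classic perceptron learning rule (nudge each weight in proportion to its input and to the error) to learn the logical AND of two inputs. The function names, the integer learning rate of 1, the epoch count, and the AND example are illustrative choices, not taken from the book.

```python
def perceptron_output(inputs, weights, threshold):
    """Fire (return 1) if the weighted sum of the inputs reaches the threshold, else return 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


def train_perceptron(examples, n_inputs, learning_rate=1, epochs=20):
    """Perceptron learning rule with illustrative settings (integer steps avoid float noise)."""
    weights = [0] * n_inputs
    threshold = 0
    for _ in range(epochs):
        for inputs, target in examples:
            # error is +1 (should have fired), -1 (should not have fired), or 0 (correct)
            error = target - perceptron_output(inputs, weights, threshold)
            # Strengthen or weaken each connection in proportion to its input.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            # Moving the threshold the opposite way makes firing easier or harder.
            threshold -= learning_rate * error
    return weights, threshold


# Hypothetical training data: the logical AND of two binary inputs.
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, threshold = train_perceptron(AND_EXAMPLES, n_inputs=2)
print("weights:", weights, "threshold:", threshold)
print([perceptron_output(x, weights, threshold) for x, _ in AND_EXAMPLES])
```

All of the program’s “knowledge” of AND ends up in a few numbers (the weights and the threshold); there is no explicit rule anywhere in it.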

Cycles of AI

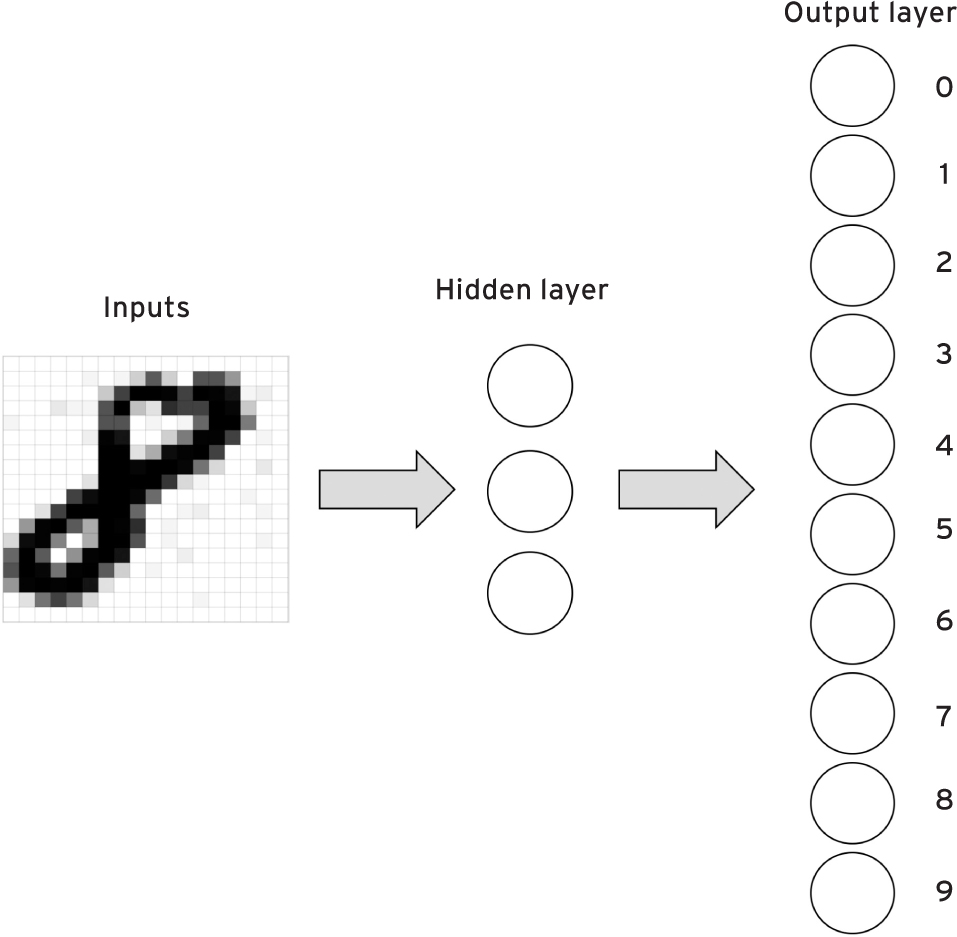

Unlike the symbolic General Problem Solver, a perceptron doesn’t have any explicit rules for performing its task; all of its “knowledge” is encoded in the numbers making up its weights and threshold. In their 1969 book Perceptrons, Minsky and Papert pointed out that if a perceptron is augmented by adding a “layer” of simulated neurons, the types of problems the device can solve are, in principle, much broader. A perceptron with such an added layer is called a multilayer neural network. Such networks form the foundations of much of modern AI. After Minsky and Papert’s critique of perceptrons, AI went into another winter, waiting for the next breakthrough.
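Here is a small Python sketch of why the extra layer matters, using the well-known XOR (exclusive-or) function, which a single perceptron cannot compute because no single straight line separates its “on” cases from its “off” cases. The weights and thresholds below are set by hand as a hypothetical illustration; nothing is learned.

```python
def step_unit(inputs, weights, threshold):
    """A perceptron-style unit: output 1 if the weighted sum reaches the threshold."""
    return 1 if sum(x * w for x, w in zip(inputs, weights)) >= threshold else 0


def xor_network(x1, x2):
    """Two-layer network with hand-picked (hypothetical) weights that computes XOR."""
    # Hidden layer: one unit fires if at least one input is on (OR) ...
    h_or = step_unit((x1, x2), (1, 1), threshold=1)
    # ... and another fires only if both inputs are on (AND).
    h_and = step_unit((x1, x2), (1, 1), threshold=2)
    # Output unit: fires when OR is on but AND is off, i.e. exactly one input is on.
    return step_unit((h_or, h_and), (1, -1), threshold=1)


print([xor_network(a, b) for a in (0, 1) for b in (0, 1)])
```

The hidden units compute intermediate features (“at least one” and “both”) that the output unit can then combine, something a single layer has no room to do.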

This was an early example of a repeating cycle of bubbles and crashes in the field of AI. The two-part cycle goes like this.

- Phase 1: New ideas create a lot of optimism in the research community. Imminent AI breakthroughs are promised, and often hyped in the news media. Money pours in from government funders and venture capitalists for both academic research and commercial start-ups.

- Phase 2: The promised breakthroughs don’t occur, or are much less impressive than promised. Government funding and venture capital dry up. Start-up companies fold, and AI research slows.

This pattern became familiar to the AI community: “AI spring,” followed by overpromising and media hype, followed by “AI winter.”

Neural Networks

A multilayer neural network can learn to use its hidden units to recognize more abstract features (for example, visual shapes, such as the top and bottom “circles” on a handwritten 8) than the simple features (for example, pixels) encoded by the input. In general, it’s hard to know ahead of time how many layers of hidden units are needed, or how many hidden units should be included in a layer, for a network to perform well on a given task. Most neural network researchers use a form of trial and error to find the best settings.

By the late 1970s and early ’80s, several research groups had definitively rebutted Minsky and Papert’s speculations on the “sterility” of multilayer neural networks by developing a general learning algorithm, called back-propagation, for training these networks. Back-propagation is a way to take an error observed at the output units and “propagate” the blame for that error backward, so as to assign proper blame to each of the weights in the network. This allows back-propagation to determine how much to change each weight in order to reduce the error. Learning in neural networks simply consists of gradually modifying the weights on connections so that each output’s error gets as close to 0 as possible on all training examples.
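The blame-assignment idea can be sketched in plain Python for a tiny network with two inputs, three hidden units, and one output, trained on XOR. Assumptions: sigmoid units, a summed squared-error loss, and full-batch gradient descent; the learning rate, epoch count, layer sizes, and random seed are arbitrary illustrative choices, and real systems rely on libraries with automatic differentiation rather than hand-written loops like these.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(n_in=2, n_hidden=3, seed=0):
    """Small random weights (illustrative seed) so hidden units start out different."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(params, x):
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(params["W1"], params["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(params["W2"], h)) + params["b2"])
    return h, y


def loss_and_grads(params, examples):
    """Summed squared error, plus each weight's share of the blame via back-propagation."""
    n_hidden = len(params["W2"])
    g = {"W1": [[0.0] * len(row) for row in params["W1"]],
         "b1": [0.0] * n_hidden, "W2": [0.0] * n_hidden, "b2": 0.0}
    loss = 0.0
    for x, target in examples:
        h, y = forward(params, x)
        loss += 0.5 * (y - target) ** 2
        # Error signal at the output: the error times the sigmoid's slope y(1 - y).
        delta_out = (y - target) * y * (1 - y)
        g["b2"] += delta_out
        for j in range(n_hidden):
            g["W2"][j] += delta_out * h[j]
            # Propagate blame backward through the connection from hidden unit j.
            delta_h = delta_out * params["W2"][j] * h[j] * (1 - h[j])
            g["b1"][j] += delta_h
            for i, xi in enumerate(x):
                g["W1"][j][i] += delta_h * xi
    return loss, g


def train(params, examples, learning_rate=0.5, epochs=5000):
    """Gradient descent: nudge every weight a little in the direction that lowers the error."""
    for _ in range(epochs):
        _, g = loss_and_grads(params, examples)
        params["b2"] -= learning_rate * g["b2"]
        for j in range(len(params["W2"])):
            params["W2"][j] -= learning_rate * g["W2"][j]
            params["b1"][j] -= learning_rate * g["b1"][j]
            for i in range(len(params["W1"][j])):
                params["W1"][j][i] -= learning_rate * g["W1"][j][i]
    return params


XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
params = init_params()
print("loss before:", loss_and_grads(params, XOR)[0])
train(params, XOR)
print("loss after: ", loss_and_grads(params, XOR)[0])
print([round(forward(params, x)[1], 2) for x, _ in XOR])
```

Comparing the loss before and after training shows gradient descent reducing the error. Whether the final predictions land close to 0 and 1 depends on the random initialization, since a network this small can occasionally stall in a poor local minimum.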

In the 1980s, the most visible group working on neural networks was a team at the University of California at San Diego headed by two psychologists, David Rumelhart and James McClelland. What we now call neural networks were then generally referred to as connectionist networks, where the term connectionist refers to the idea that knowledge in these networks resides in weighted connections between units. The team led by Rumelhart and McClelland is known for writing the so-called bible of connectionism, a two-volume treatise published in 1986 called Parallel Distributed Processing. In the midst of an AI landscape dominated by symbolic AI, the book was a pep talk for the subsymbolic approach, arguing that “people are smarter than today’s computers because the brain employs a basic computational architecture that is more suited to …”

Machine Learning

Inspired by statistics and probability theory, AI researchers developed numerous algorithms that enable computers to learn from data, and the field of machine learning became its own independent subdiscipline of AI, intentionally separate from symbolic AI. Machine-learning researchers disparagingly referred to symbolic AI methods as good old-fashioned AI, or GOFAI (pronounced “go-fye”) and roundly rejected them. Over the next two decades, machine learning had its own cycles of optimism, government funding, start-ups, and overpromising, followed by the inevitable winters. Training neural networks and similar methods to solve real-world problems could be glacially slow, and often didn’t work very well, given the limited amount of data and computer power available at the time. But more data and computing power were coming shortly. The explosive growth of the internet would see to that. The stage was set for the next big AI revolution.

The author spent the rest of the book exploring and highlighting the successes and failures of the machine-learning approach to machine intelligence: from IBM’s Deep Blue chess-playing system defeating world chess champion Garry Kasparov, to Google’s launch of its automated language-translation service, Google Translate; Google’s self-driving cars; virtual assistants such as Apple’s Siri and Amazon’s Alexa; Facebook’s algorithm for detecting faces in pictures; IBM’s Watson program roundly defeating human champions on television’s Jeopardy! game show; and AlphaGo stunningly defeating one of the world’s best players in four out of five games.

The buzz over artificial intelligence was quickly becoming deafening, and the commercial world took notice. All of the largest technology companies have poured billions of dollars into AI research and development, either hiring AI experts directly or acquiring smaller start-up companies for the sole purpose of grabbing (“acqui-hiring”) their talented employees. The potential of being acquired, with its promise of instant millionaire status, has fueled a proliferation of start-ups, often founded and run by former university professors, each with his or her own twist on AI. As the technology journalist Kevin Kelly observed, “The business plans of the next 10,000 startups are easy to forecast: Take X and add AI.” And, crucially, for nearly all of these companies, AI has meant “deep learning.”

AI spring is once again in full bloom.

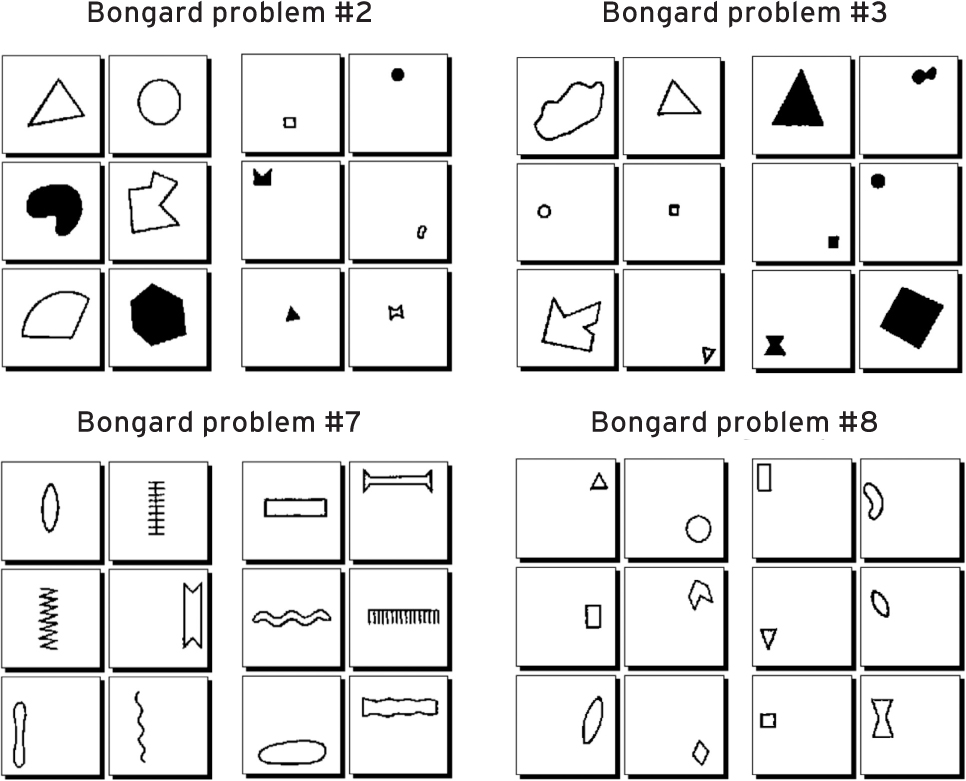

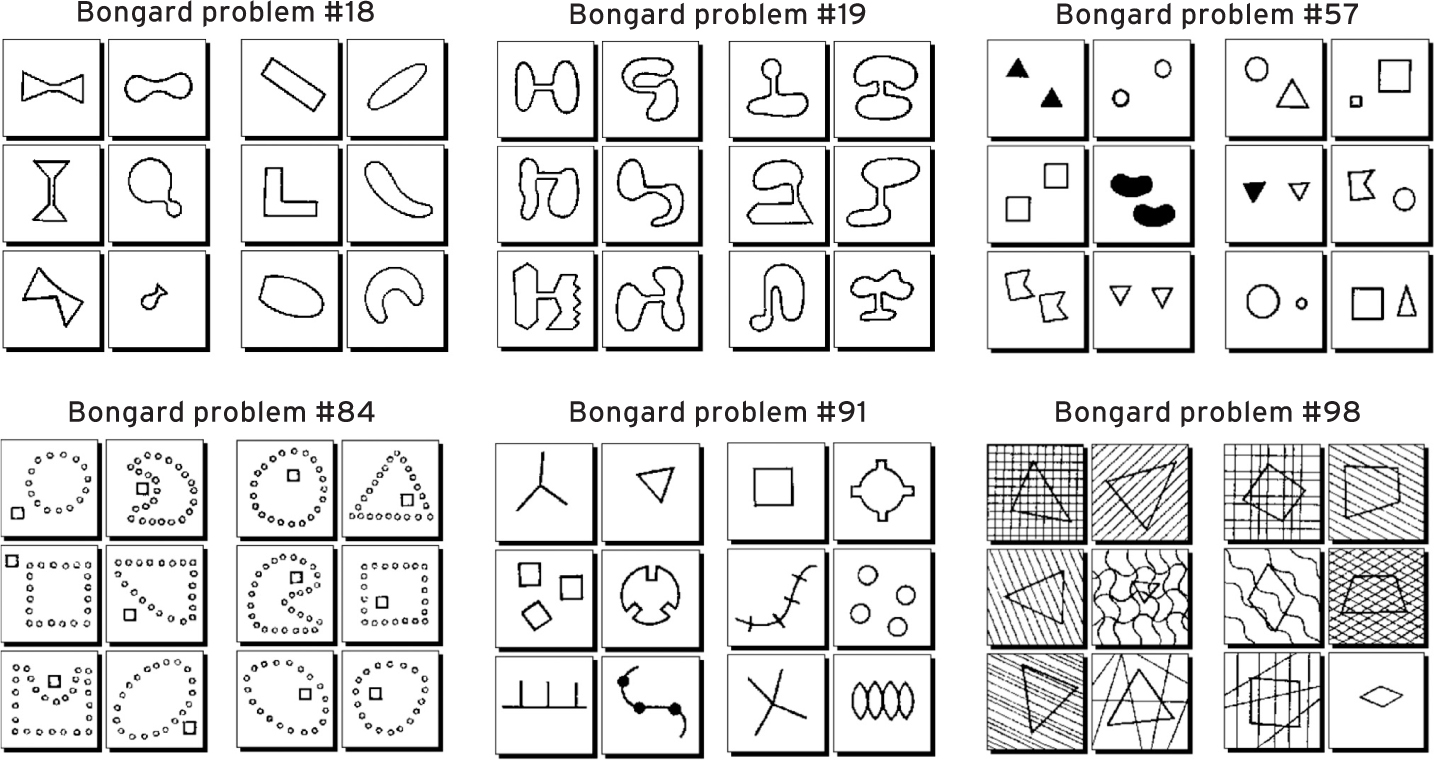

Barrier of Meaning

The author argues that AI has no chance of breaking through the barrier of meaning. The phrase “barrier of meaning” perfectly captures an idea that has permeated this book: humans, in some deep and essential way, understand the situations they encounter, whereas no AI system yet possesses such understanding. While state-of-the-art AI systems have nearly equaled (and in some cases surpassed) humans on certain narrowly defined tasks, these systems all lack a grasp of the rich meanings humans bring to bear in perception, language, and reasoning. This lack of understanding is clearly revealed by the un-humanlike errors these systems can make; by their difficulties with abstracting and transferring what they have learned; by their lack of commonsense knowledge; and by their vulnerability to adversarial attacks. The barrier of meaning between AI and human-level intelligence still stands today. The author then tries to construct a framework for human understanding, posing three main challenges to current AI systems: knowledge, abstraction, and analogy.

We are Really, Really Far Away

The author’s final claim is that “We Are Really, Really Far Away.” The modern age of artificial intelligence is dominated by deep learning, with its triumvirate of deep neural networks, big data, and ultrafast computers. However, in the quest for robust and general intelligence, deep learning may be hitting a wall: the all-important “barrier of meaning.” In his post titled “The State of Computer Vision and AI: We Are Really, Really Far Away,” Andrej Karpathy, the deep-learning and computer-vision expert who was then directing AI efforts at Tesla, describes his reactions, as a computer-vision researcher, to one specific photo, shown in figure . Karpathy notes that we humans find this image quite humorous, and asks, “What would it take for a computer to understand this image as you or I do?”

Karpathy lists many of the things we humans easily understand but that remain beyond the abilities of today’s best computer-vision programs. For example, we recognize that there are people in the scene, but also that there are mirrors, so some of the people are reflections in those mirrors. We recognize the scene as a locker room and we are struck by the oddity of seeing a bunch of people in suits in a locker-room setting.

Furthermore, we recognize that a person is standing on a scale, even though the scale is made up of white pixels that blend in with the background. Karpathy points out that we recognize that “Obama has his foot positioned just slightly on top of the scale,” and notes that we easily describe this in terms of the three-dimensional structure of the scene we infer rather than the two-dimensional image that we are given. Our intuitive knowledge of physics lets us reason that Obama’s foot will cause the scale to overestimate the weight of the person on the scale. Our intuitive knowledge of psychology tells us that the person on the scale is not aware that Obama is also stepping on the scale—we infer this from the person’s direction of gaze, and we know that he doesn’t have eyes in the back of his head. We also understand that the person probably can’t sense the slight push of Obama’s foot on the scale. Our theory of mind further lets us predict that the man will not be happy when the scale shows his weight to be higher than he expected.

Finally, we recognize that Obama and the other people observing this scene are smiling—we infer from their expressions that they are amused by the trick Obama is playing on the man on the scale, possibly made funnier because of Obama’s status. We also recognize that their amusement is friendly, and that they expect the man on the scale to himself laugh when he is let in on the joke. Karpathy notes: “You are reasoning about [the] state of mind of people, and their view of the state of mind of another person. That’s getting frighteningly meta.”

In summary, “It is mind-boggling that all of the above inferences unfold from a brief glance at a 2D array of [pixel] values.”

The book is a must-read whether or not you agree with the author’s position on how far we are from human-level artificial intelligence. Its simple language, entertaining narrative, and ample technical detail make it a great education on the state of the art in machine intelligence research and the challenges it faces today.

About the Author

Melanie Mitchell has a Ph.D. in computer science from the University of Michigan, where she studied with the cognitive scientist and writer Douglas Hofstadter; together, they created the Copycat program, which makes creative analogies in an idealized world. The author or editor of five books and numerous scholarly papers, she is currently professor of computer science at Portland State University and external professor at the Santa Fe Institute.
